Can Pouliquen

PhD candidate, Engineer

News: I'm actively talking with researchers and engineers about working on impactful deep learning systems. Don't hesitate to contact me for a quick chat if you think we might be a good fit! :-)

Hello, I'm a final-year PhD student in machine learning at the Ecole Normale Supérieure de Lyon, under the supervision of Mathurin Massias, Titouan Vayer and Paulo Gonçalves, in the Inria OCKHAM team led by Rémi Gribonval.

My research interests lie at the intersection of deep learning, optimization and statistics. I've recently transitioned into Transformers and applied deep learning. I also have a strong interest in software engineering and am very keen on Python.

Outside of research, I enjoy long-distance mountain running, weighted calisthenics and various outdoor activities.

Research & Projects

I'm interested in deep learning in the broad sense. After working on structure learning for a while, I've recently been exploring generative modelling with Transformers. Here are some of the research and open-source software projects I am working on or have worked on, most recent first.


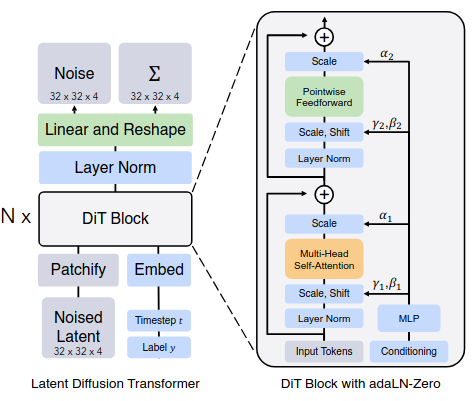

Closed-source project around Transformers and diffusion

Some colleagues and I are exploring Transformer-based approaches to diffusion. Feel free to reach out if you are curious :)

Tiny-LLaMA

GitHub Repo

This is another ongoing project, in which I'm building a small LLaMA-like LLM from scratch. My goal is to understand in detail what's under the hood of these models, so I try to make the code as reusable as possible for future reference. This project is paired with weekly tutorials I give to our lab at the ENS de Lyon, and with a smaller project, Tiny-Tokenizer, on building a BPE tokenizer from scratch.

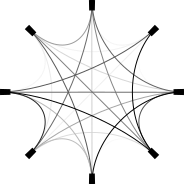

Schur's Positive-Definite Network: Deep Learning in the SPD cone with structure

Can Pouliquen, Mathurin Massias, Titouan Vayer

ICLR, 2025

paper / code

We introduce SpodNet, the first deep learning layer that guarantees symmetric positive-definite (SPD) outputs with additional controllable structure such as sparsity.

Implicit differentiation for hyperparameter tuning the weighted Graphical Lasso

Can Pouliquen, Paulo Gonçalves, Mathurin Massias, Titouan Vayer

GRETSI, 2023

paper

We design an algorithm for computing optimal hyperparameter(s) of the Graphical Lasso by solving a bi-level optimization problem.


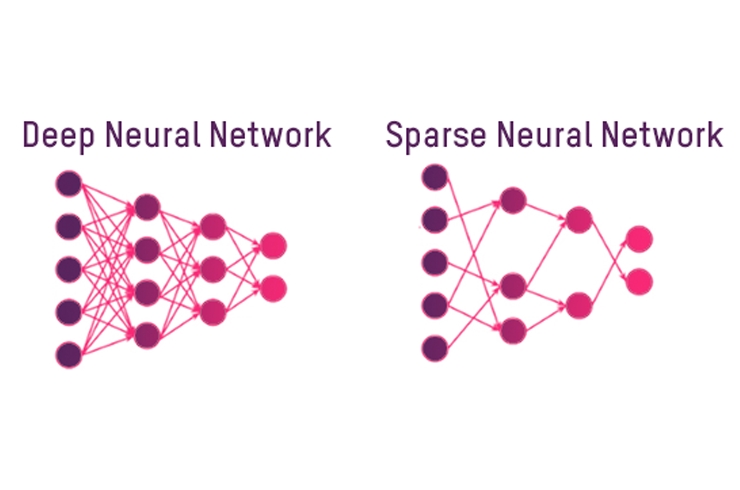

Can sparsity improve the privacy of neural networks?

Antoine Gonon, Léon Zheng, Clément Lalanne, Quoc-Tung Le, Guillaume Lauga, Can Pouliquen

GRETSI, 2023

paper

We investigate whether sparsifying the weights of neural networks improves their robustness against membership inference attacks.

Skills

Programming

Around 5 years of experience with Python in prototyping and development, in both research and industry environments. Proficient in various frameworks (PyTorch, NumPy, scikit-learn, SciPy, etc.) and version control (Git, GitHub). Experienced with development on computational clusters and GPUs.

Around 2 or 3 years of experience with C in low-level embedded systems development, in academic and industry environments.

Prior practical experience with C++, Rust, MATLAB and Assembly.

Reviewing

NeurIPS: 2023, 2024

ICLR: 2024, 2025

ICML: 2024, 2025

Electronic Journal of Statistics: 2025

GRETSI: 2023, 2025

Teaching

ENS de Lyon: Convex optimization, M1 (2023-2024)

CPE Lyon: Optimization algorithms, M1 (2023-2024)

Ecole Centrale de Lyon: Probability theory, L3 (2023-2024)

Spoken languages

French, Turkish: mother tongues

English: full proficiency

German, Spanish: working knowledge (I've passed B1 exams in both)

Contact
